Cross Validation In Machine Learning Dataaspirant

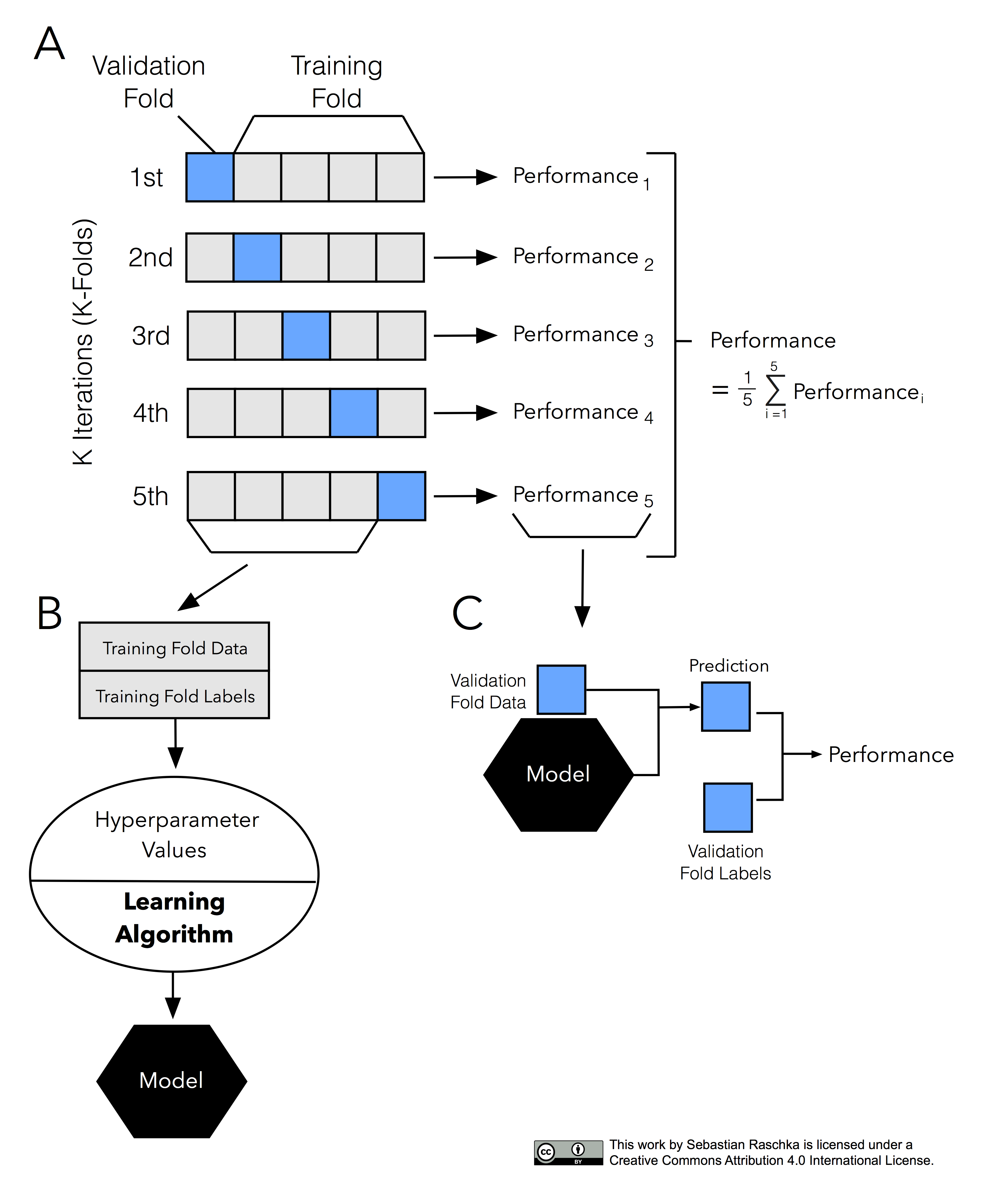

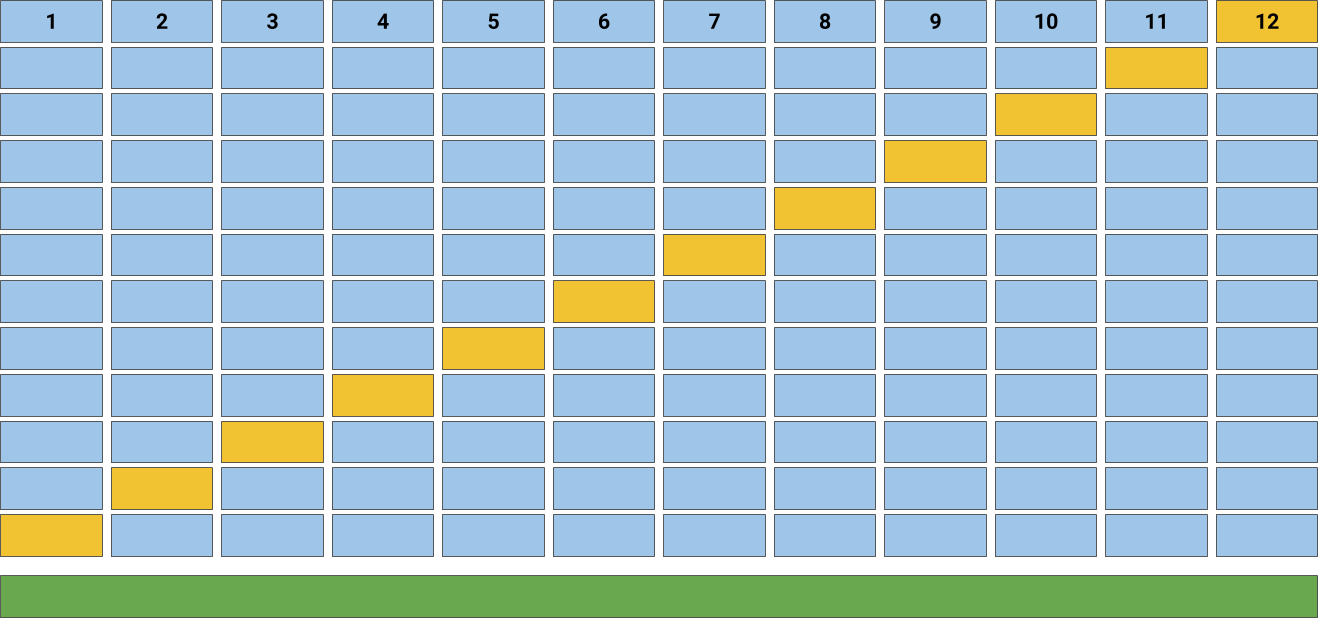

In this code, we split the data into 10 subsets using KFold (from sklearn.model_selection). KFold handles the splitting into cross-validation subsets and the train/validation assignments. In particular, the KFold.split method returns an iterator that we can loop through. On each iteration, it assigns a different subset to validation and returns the indices of the new training and validation sets.
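Here is a minimal sketch of the loop just described. It assumes scikit-learn and a small placeholder array made up for illustration. The code only inspects the indices that `KFold` hands out in each round; no model is trained.

```python
import numpy as np
from sklearn.model_selection import KFold

# Placeholder data (an assumption): 20 samples with 2 features each
X = np.arange(40).reshape(20, 2)

kf = KFold(n_splits=10)  # 10 subsets, as in the text; no shuffling by default

val_counts = np.zeros(len(X), dtype=int)
for fold, (train_idx, val_idx) in enumerate(kf.split(X)):
    # Each iteration yields two index arrays; a different subset is held out for validation
    print(f"fold {fold}: {len(train_idx)} training indices, validation indices {val_idx.tolist()}")
    val_counts[val_idx] += 1

# Every sample is assigned to validation exactly once across the 10 folds
print(val_counts.tolist())
```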

Cross-Validation Strategies for Time Series Forecasting [Tutorial]

Oct 30, 2019. Cross-validation is a technique used in machine learning in which every data point is used for both training and testing, in different rounds. This helps us identify the better-performing algorithm.
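To make "identify the better algorithm" concrete, this sketch compares two classifiers by their mean 5-fold accuracy. The Iris dataset, the two candidate models, and `cv=5` are illustrative assumptions, not choices from the source.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# Two candidate algorithms, chosen only for illustration
candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "decision_tree": DecisionTreeClassifier(random_state=0),
}

mean_scores = {}
for name, model in candidates.items():
    # Each sample is used for testing in exactly one of the 5 folds
    scores = cross_val_score(model, X, y, cv=5)
    mean_scores[name] = scores.mean()
    print(f"{name}: mean accuracy {scores.mean():.3f} (+/- {scores.std():.3f})")

best = max(mean_scores, key=mean_scores.get)
print("better algorithm on this data:", best)
```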

Importance of Cross-Validation: Are Evaluation Metrics Enough?

Cross-validation (CV) is one of the key topics in testing your learning models. Although the subject is widely known, I still find that misconceptions surround some of its aspects. We will again use the scikit-learn library to perform the cross-validation: `from sklearn.model_selection import LeaveOneOut`, then `cv_strategy = LeaveOneOut()`, which is passed as the `cv` argument of `cross_val_score`.
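The LeaveOneOut snippet can be completed as follows. This sketch assumes the Iris dataset and a logistic-regression model, both hypothetical choices. Because each round holds out a single sample, there are as many rounds as samples, and each round's score is either 0 or 1.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import LeaveOneOut, cross_val_score

X, y = load_iris(return_X_y=True)

cv_strategy = LeaveOneOut()  # one sample held out per round, so n rounds in total
scores = cross_val_score(LogisticRegression(max_iter=1000), X, y, cv=cv_strategy)

# Each score is the accuracy on a single held-out sample (0 or 1);
# their mean is the leave-one-out accuracy estimate
print(len(scores), scores.mean())
```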

What Is Cross-Validation in Machine Learning? Types of Cross-Validation

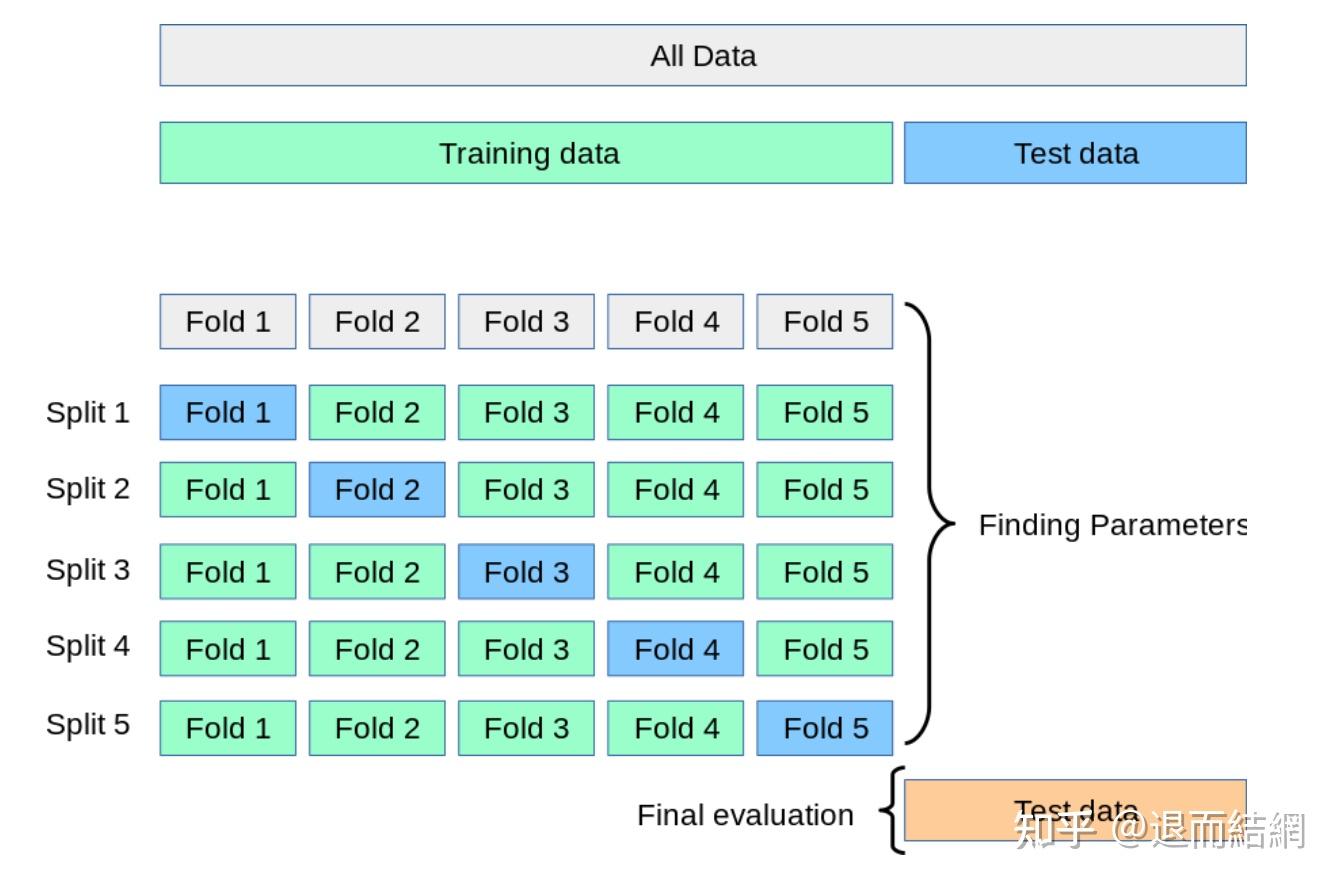

Summary. In this tutorial, you discovered how to do a training-validation-test split of a dataset, how to perform k-fold cross-validation to select a model correctly, and how to retrain the model after the selection. Specifically, you learned the significance of the training-validation-test split in helping model selection.
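Here is a compact sketch of the workflow summarized above. It rests on assumptions that are not in the source: the Iris data, an 80/20 development/test split, and k-NN with a hypothetical list of candidate `n_neighbors` values.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

# Hold out a test set that plays no part in model selection
X_dev, X_test, y_dev, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Hypothetical candidate models: k-NN with different numbers of neighbours
candidate_k = [1, 3, 5, 7, 9]
cv_means = {}
for k in candidate_k:
    # 5-fold CV on the development data; each fold serves once as the validation split
    scores = cross_val_score(KNeighborsClassifier(n_neighbors=k), X_dev, y_dev, cv=5)
    cv_means[k] = scores.mean()

best_k = max(cv_means, key=cv_means.get)

# Retrain the selected model on all development data, then evaluate once on the test set
final_model = KNeighborsClassifier(n_neighbors=best_k).fit(X_dev, y_dev)
test_accuracy = final_model.score(X_test, y_test)
print(best_k, round(cv_means[best_k], 3), round(test_accuracy, 3))
```

The test set is used exactly once, after selection and retraining, so its score plays no role in picking the model.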

Model evaluation, model selection, and algorithm selection in machine learning

Proper Model Selection through Cross-Validation. Cross-validation is an integral part of machine learning. Model validation is certainly not the most exciting task, yet it is vital for building accurate and reliable models. In this article, I will outline the basics of cross-validation (CV), how it compares to random sampling, how (and if) ensemble.
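One way to see how k-fold CV differs from repeated random sampling is to count how often each sample lands in a validation set. This sketch uses scikit-learn's `KFold` and `ShuffleSplit` on a placeholder array of 100 samples. The split sizes are assumptions, chosen so that both schemes draw 100 validation slots in total.

```python
import numpy as np
from sklearn.model_selection import KFold, ShuffleSplit

X = np.zeros((100, 1))  # placeholder: only the number of samples matters here


def validation_coverage(splitter, data):
    """Count how many times each sample is used for validation."""
    counts = np.zeros(len(data), dtype=int)
    for _, val_idx in splitter.split(data):
        counts[val_idx] += 1
    return counts


kfold_counts = validation_coverage(KFold(n_splits=5, shuffle=True, random_state=0), X)
random_counts = validation_coverage(
    ShuffleSplit(n_splits=5, test_size=0.2, random_state=0), X
)

# k-fold: every sample is validated exactly once
print(np.bincount(kfold_counts))
# Random sampling: typically some samples are validated several times and others never
print(np.bincount(random_counts))
```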

Best approach for model selection: Bayesian or cross-validation? Cross

Commonly used approaches to model selection are based on predictive scores and include information criteria, such as Akaike's information criterion, and cross-validation. Because it is based on data splitting, cross-validation is particularly versatile: it can be used even when it is not possible to derive a likelihood (e.g., many forms of machine learning).
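This sketch contrasts the two approaches on a regression problem where a likelihood is available, so both can be computed. It assumes synthetic quadratic data with Gaussian noise, polynomial models of degree 1 to 6, and the Gaussian least-squares form of AIC, n·ln(RSS/n) + 2k, which drops constants that are identical across models. Cross-validation only needs predictions, which is why it still applies when no likelihood exists.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

rng = np.random.default_rng(0)
# Synthetic data (an assumption): a quadratic trend plus Gaussian noise
x = rng.uniform(-3, 3, size=(80, 1))
y = 1.0 + 2.0 * x[:, 0] - 0.5 * x[:, 0] ** 2 + rng.normal(scale=1.0, size=80)


def gaussian_aic(model, X, y, k):
    """AIC for least squares with Gaussian errors, up to an additive constant."""
    rss = np.sum((y - model.predict(X)) ** 2)
    n = len(y)
    return n * np.log(rss / n) + 2 * k


results = {}
cv = KFold(n_splits=5, shuffle=True, random_state=0)
for degree in range(1, 7):
    model = make_pipeline(PolynomialFeatures(degree), LinearRegression())
    model.fit(x, y)
    # k counts the polynomial coefficients including the intercept
    aic = gaussian_aic(model, x, y, k=degree + 1)
    # Likelihood-free alternative: 5-fold cross-validated mean squared error
    cv_mse = -cross_val_score(model, x, y, cv=cv, scoring="neg_mean_squared_error").mean()
    results[degree] = (aic, cv_mse)
    print(f"degree {degree}: AIC={aic:.1f}  CV MSE={cv_mse:.3f}")

best_by_aic = min(results, key=lambda d: results[d][0])
best_by_cv = min(results, key=lambda d: results[d][1])
print("AIC picks degree", best_by_aic, "| CV picks degree", best_by_cv)
```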

Flowchart for cross-validation. (a) The 5-fold cross-validation process

Abstract. Used to estimate the risk of an estimator or to perform model selection, cross-validation is a widespread strategy because of its simplicity and its (apparent) universality. Many results exist on the model selection performance of cross-validation procedures. This survey intends to relate these results to the most recent advances of.

In this external validation, we used non-parametric methods for binding abundance and controlled the false discovery rate in group comparisons. For predictive modelling, we used logistic regression, model selection methods, and cross-validation to identify clinical and peptide variables that predict SSA− SjD and FS positivity.
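The abstract does not say which model selection methods were used. As one hypothetical illustration, this sketch fits an L1-penalized logistic regression whose penalty strength is chosen by 5-fold cross-validation. The synthetic data stand in for clinical and peptide variables, and the variables with nonzero coefficients are the ones kept. None of this reproduces the study.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegressionCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in (an assumption): 200 subjects, 30 candidate variables, 5 informative
X, y = make_classification(n_samples=200, n_features=30, n_informative=5,
                           n_redundant=0, random_state=0)

# L1-penalised logistic regression; 5-fold CV picks the inverse penalty strength C
model = make_pipeline(
    StandardScaler(),
    LogisticRegressionCV(Cs=10, cv=5, penalty="l1", solver="liblinear", scoring="roc_auc"),
)
model.fit(X, y)

clf = model[-1]
# Variables whose coefficients survived the L1 penalty
selected = [i for i, c in enumerate(clf.coef_[0]) if c != 0]
print("chosen C:", clf.C_[0])
print("variables kept:", selected)
```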

Cross Validation and Model Selection Python For Engineers

But consider a crucial point: we'll report 0.97 (obtained via the nested cross-validation procedure) as our generalization estimate, not the 0.99 we got here. That's it! You are now equipped with the nested cross-validation technique to select the best model and optimize hyperparameters!
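Here is a sketch of nested cross-validation with scikit-learn. It assumes the Iris data, an RBF support vector classifier, and a hypothetical hyperparameter grid. The inner loop (`GridSearchCV`) tunes the hyperparameters, and the outer loop (`cross_val_score`) scores the whole tuning procedure on folds it never saw. It will not reproduce the 0.97 and 0.99 figures quoted above.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

param_grid = {"C": [0.1, 1, 10], "gamma": [0.01, 0.1, 1]}  # hypothetical grid
inner_cv = KFold(n_splits=4, shuffle=True, random_state=1)
outer_cv = KFold(n_splits=4, shuffle=True, random_state=2)

# Inner loop: GridSearchCV tunes C and gamma on whatever training data it receives
search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=inner_cv)

# Non-nested estimate: the best inner-CV score, which is optimistically biased
search.fit(X, y)
non_nested = search.best_score_

# Nested estimate: each outer fold re-runs the full search on its training part
nested_scores = cross_val_score(search, X, y, cv=outer_cv)
print(f"non-nested: {non_nested:.3f}  nested: {nested_scores.mean():.3f}")
```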

Model selection Cross validation YouTube

On this ground, cross-validation (CV) has been extensively used in data mining for model selection or modeling-procedure selection (see, e.g., Hastie et al., 2009). A fundamental issue in applying CV to model selection is the choice of the data splitting ratio, or the validation size $n_v$, and a number of theoretical results have been.
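To see the splitting-ratio trade-off in practice, this sketch repeats random splits at three validation fractions. It assumes the Iris data and logistic regression. A larger validation size n_v leaves fewer samples for training, and the spread of the scores across repetitions can be compared between ratios.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import ShuffleSplit, cross_val_score

X, y = load_iris(return_X_y=True)

summary = {}
for ratio in (0.1, 0.3, 0.5):
    # test_size fixes the validation fraction; the remaining n - n_v samples are for training
    cv = ShuffleSplit(n_splits=20, test_size=ratio, random_state=0)
    _, first_val_idx = next(cv.split(X))
    n_v = len(first_val_idx)
    scores = cross_val_score(LogisticRegression(max_iter=1000), X, y, cv=cv)
    summary[ratio] = (n_v, scores.mean(), scores.std())
    print(f"n_v={n_v:3d}  training size={len(X) - n_v:3d}  "
          f"mean accuracy={scores.mean():.3f}  std={scores.std():.3f}")
```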

(PDF) Cross validation model selection criteria for linear regression

2. AIC and BIC explicitly penalize the number of parameters and cross-validation does not, so, again, it is not surprising that they suggest a model with fewer parameters; this seems to be a broadly incorrect conclusion based on a false dichotomy. The asymptotic equivalence between AIC/BIC and certain versions of cross-validation shows that.
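For reference, these are the standard definitions of the two criteria. Here $k$ is the number of estimated parameters, $n$ is the sample size, and $\hat{L}$ is the maximized likelihood:

$$
\mathrm{AIC} = 2k - 2\ln\hat{L}, \qquad \mathrm{BIC} = k\ln n - 2\ln\hat{L}
$$

Both criteria add an explicit penalty in $k$. BIC's penalty is larger than AIC's whenever $\ln n > 2$, that is, for $n \ge 8$. Cross-validation has no such term and instead penalizes overfitting implicitly through its held-out error.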

Cross-validation and hyperparameter tuning Data Science Central

Next, to implement cross-validation, the cross_val_score function of the sklearn.model_selection module can be used. For a classifier, cross_val_score returns the accuracy for each fold by default. Four parameters are passed to cross_val_score here. The first parameter is estimator, which specifies the algorithm that you want to.
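Here is a sketch of the call with the four parameters named as keywords. It assumes the Iris data and a decision tree as the estimator. When `cv` is an integer and the estimator is a classifier, scikit-learn uses stratified folds, and the result is one score per fold.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

scores = cross_val_score(
    estimator=DecisionTreeClassifier(random_state=0),  # the algorithm to evaluate
    X=X,                                                # feature matrix
    y=y,                                                # target labels
    cv=5,                                               # number of folds
)
# One score per fold; for a classifier the default scorer is accuracy
print(scores)
print(f"mean accuracy: {scores.mean():.3f}")
```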

Model selection and cross validation techniques

Step 1 - Fit the model to all available data, using the function fit_model. This gives you the model that you will use in operation or deployment.

Step 2 - Performance evaluation. Perform repeated cross-validation using all available data. In each fold, the data are partitioned into a training set and a test set.
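Here is a sketch of the two steps, assuming the Iris data and logistic regression. `fit_model` is a hypothetical stand-in for the function named in the text, and `RepeatedStratifiedKFold` with 5 folds and 3 repeats is an assumed configuration. The fold models are used only for scoring; the deployed model is the one from Step 1.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RepeatedStratifiedKFold

X, y = load_iris(return_X_y=True)


def fit_model(X, y):
    """Hypothetical stand-in for the text's fit_model: returns a fitted classifier."""
    return LogisticRegression(max_iter=1000).fit(X, y)


# Step 1: the deployed model is fitted on all available data
deployed_model = fit_model(X, y)

# Step 2: estimate its performance with repeated cross-validation on the same data
rskf = RepeatedStratifiedKFold(n_splits=5, n_repeats=3, random_state=0)
fold_scores = []
for train_idx, test_idx in rskf.split(X, y):
    # Refit the same procedure on each training split and score it on the held-out split
    fold_model = fit_model(X[train_idx], y[train_idx])
    fold_scores.append(fold_model.score(X[test_idx], y[test_idx]))

print(f"{len(fold_scores)} folds, mean accuracy {np.mean(fold_scores):.3f}")
```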

Cross-validation accuracy of genomic selection models for predicting

The accuracy of the model is the average of the accuracies of the individual folds. In this tutorial, you discovered why we need cross-validation, got a gentle introduction to different types of cross-validation techniques, and worked through a practical example of the k-fold cross-validation procedure for estimating the skill of machine learning models.
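Here is a sketch of that averaging done by hand, assuming the Iris data and a 5-nearest-neighbors classifier. The code fits on each training split, scores on the held-out split, and then takes the mean. Passing the same splitter to `cross_val_score` should yield the same per-fold numbers.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

fold_accuracies = []
for train_idx, test_idx in cv.split(X, y):
    model = KNeighborsClassifier(n_neighbors=5).fit(X[train_idx], y[train_idx])
    fold_accuracies.append(model.score(X[test_idx], y[test_idx]))

model_accuracy = np.mean(fold_accuracies)  # average of the per-fold accuracies

# The same folds passed to cross_val_score give the same per-fold scores
auto_scores = cross_val_score(KNeighborsClassifier(n_neighbors=5), X, y, cv=cv)
print(fold_accuracies, model_accuracy)
```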

Cross Validation Scores — Yellowbrick v1.5 documentation

Cross-validation and model selection: Cross-validation iterators can also be used to perform model selection directly, using grid search to find the optimal hyperparameters of the model. This is the topic of the next section, Tuning the hyper-parameters of an estimator.
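Here is a minimal sketch of passing a cross-validation iterator straight to grid search. It assumes the Iris data, a k-NN classifier, and a hypothetical grid of `n_neighbors` values.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

# Any cross-validation iterator can be passed as the `cv` argument
cv_iterator = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
search = GridSearchCV(
    KNeighborsClassifier(),
    param_grid={"n_neighbors": [1, 3, 5, 7, 9, 11]},  # hypothetical search space
    cv=cv_iterator,
)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```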

Cross-Validation Summary - Zhihu

Key words: cross-validation, information theory, model selection, overfitting, parsimony, post-selection inference

1. Model selection uses the available data to compare and select amongst a set of candidate models for the typical purpose of inference and/or prediction. The set of models correspond to
